For developers and system administrators managing dynamic web applications, combining caching with real-time data updates is both a blessing and a curse. Host-level object caching and Redis might seem like a golden combo for performance, but when improperly tuned, they can downright sabotage each other. Understanding this conflict, and how Time-To-Live (TTL) settings can restore harmony, improves app responsiveness, reduces bugs, and leads to a better user experience.

TL;DR

Host-level object caching can interfere with Redis’ ability to serve updated data, especially when Redis stores transient dynamic content. This conflict often results in stale data being served for longer than intended. By adjusting the TTL (Time-To-Live) settings within both the caching layer and Redis, developers can fine-tune data freshness and control memory usage. Understanding each system’s role and coordinating their expiry mechanisms is key to maintaining performance without sacrificing data accuracy.

The Role of Host-Level Object Caching

Object caching on the host level refers to server-side cache systems such as APCu, or platform-specific configurations like WordPress object caches. (OPcache is often mentioned alongside them, but it caches compiled PHP bytecode rather than application objects.) These caches store database query results, function results, and serialized objects in memory to avoid redundant processing and database hits.

On the surface, this looks like an efficient performance optimization. However, when combined with dynamic systems like Redis, it can cause stale data to persist when it is supposed to be temporary. The cached object in host memory becomes a relic, disconnected from the live state of the app.

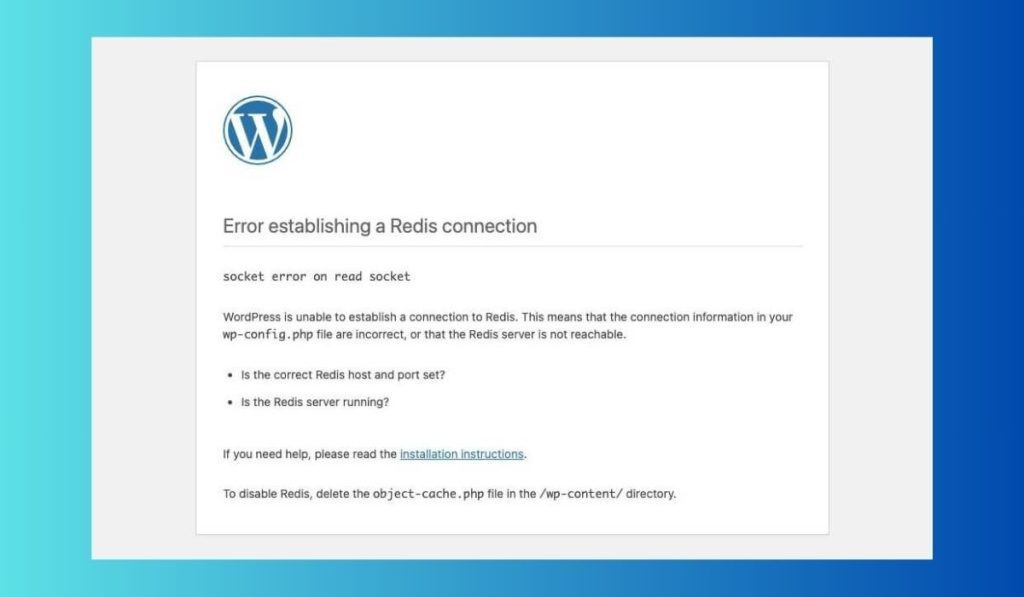

Understanding Redis in the Data Stack

Redis is an in-memory data structure store known for its blazing speed and versatility. It’s commonly used for:

- Session management

- Queue handling

- Transient data like cart tokens or temporary user preferences

- Caching rapidly changing query results or frequently accessed keys

Redis’ Time-To-Live (TTL) functionality allows developers to set a countdown after which the data expires. It’s particularly valuable for managing memory and ensuring content reflects real-time conditions. However, this TTL mechanism gets sabotaged when another caching layer stores the object beyond its intended lifecycle.
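The countdown behaviour described above can be sketched without a running Redis server. The block below is only an illustration: `TTLStore` and `FakeClock` are hypothetical names invented for this example, and the in-memory class is a stand-in for a key-value store with per-key expiry, not a Redis client.

```python
import time


class FakeClock:
    """Manually advanced clock so the example runs without real waiting."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class TTLStore:
    """In-memory stand-in for a store with per-key expiry (not a Redis client)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        # Record the value together with the moment it stops being valid.
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expire lazily on read
            return None
        return value


clock = FakeClock()
store = TTLStore(clock)
store.set("cart_token:42", "abc123", ttl_seconds=5)

print(store.get("cart_token:42"))  # still live at t=0
clock.advance(6)
print(store.get("cart_token:42"))  # the countdown has elapsed, so the key is gone
```

The injected clock is a design choice for the example only; it makes expiry deterministic so the behaviour can be checked without sleeping.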

The Core Conflict: Host Cache vs. Redis TTL

The primary issue stems from host-level object caches keeping their own copy of data that originally came from Redis. If data is read from Redis and then saved in host memory, that copy does not honor Redis’ TTL. No matter how short the TTL is in Redis, the host cache holds on to the stale copy until its own expiration policy replaces it.

This leads to surprising outcomes such as:

- Users seeing outdated data even though Redis has already expired it

- Admin updates on the backend not reflecting until host cache clears

- Difficulty debugging issues as Redis appears to be accurate but the served content is stale
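The symptoms listed above can be reproduced in a few lines. This is a simulation under stated assumptions: `TTLCache`, `FakeClock`, and `read_stock` are hypothetical names, both layers are modelled by the same small in-memory class, and the host layer is assumed to store whatever it reads with its own, longer TTL.

```python
class FakeClock:
    """Manually advanced clock so the example is deterministic."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class TTLCache:
    """Minimal per-key expiry cache used to model both layers."""

    def __init__(self, clock):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or self._clock() >= entry[1]:
            self._data.pop(key, None)
            return None
        return entry[0]


def read_stock(product_id, host_cache, shared_cache, host_ttl):
    """Read through the host layer first, then fall back to the shared layer."""
    key = f"stock:{product_id}"
    cached = host_cache.get(key)
    if cached is not None:
        return cached  # served from host memory; the shared layer is never asked
    value = shared_cache.get(key)
    if value is not None:
        host_cache.set(key, value, host_ttl)  # host copy gets its own lifetime
    return value


clock = FakeClock()
shared = TTLCache(clock)  # plays the role of Redis
host = TTLCache(clock)    # plays the role of the host-level object cache

shared.set("stock:125", 3, ttl_seconds=5)
print(read_stock(125, host, shared, host_ttl=600))  # fresh value, now copied to host

clock.advance(6)
print(shared.get("stock:125"))                      # shared layer has expired the key
print(read_stock(125, host, shared, host_ttl=600))  # host layer still serves the old value
```

Inspecting only the shared layer at this point would suggest everything is correct, which is why the problem is hard to debug.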

A Real-World Use Case: E-commerce Flash Sales

Imagine an e-commerce site running a flash sale. Product quantities change by the second. To keep operations optimal, developers use Redis for managing stock levels in real time. Each product’s quantity is cached with a TTL of 5 seconds to reduce constant database hits and allow fast updates.

However, the platform also uses host-level object caching, which caches the product detail object (including stock) for 10 minutes. This results in users seeing a product as “In Stock” long after Redis has declared it unavailable. Worse yet, customers can add unavailable items to carts—leading to a poor user experience and logistical issues.

The TTL in Redis becomes moot when the host-level cache delivers outdated content. Fixing this required rethinking how TTL policies should align across these layers.
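A rough way to reason about the numbers in this scenario is to add the lifetimes up. The helper below is a back-of-the-envelope sketch, not a measurement: `worst_case_staleness` is a hypothetical name, and it assumes the host layer may copy a value from Redis at any point in that value's life and then keep it for the full host TTL.

```python
def worst_case_staleness(shared_ttl_seconds, host_ttl_seconds):
    """Upper bound on how old a served value can be, in seconds.

    Assumption: the value can sit in the shared cache for almost its whole
    TTL before the host layer copies it, and the host layer then keeps that
    copy for its own full TTL.
    """
    return shared_ttl_seconds + host_ttl_seconds


# Flash-sale settings from the scenario: 5 s in Redis, 10 min on the host.
print(worst_case_staleness(5, 10 * 60))  # 605

# With the host TTL brought down to match Redis, the bound shrinks sharply.
print(worst_case_staleness(5, 5))        # 10
```

Under these assumptions the 5-second TTL contributes almost nothing to the bound; the host TTL dominates it.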

Bridging the Gap with Synchronized TTL Settings

Dynamic updates were restored after a key realization: cache expiry has to be aligned across layers, so that the host cache never holds a value longer than the Redis entry it came from remains valid.

Here’s how teams resolved the issue:

- Reduced host-level cache TTL for objects that rely on transient content like stock levels, session values, or real-time analytics. This ensured that such objects wouldn’t live beyond their usefulness even in memory.

- Utilized cache-busting keys or versioning: By changing the cache key or tagging it dynamically (e.g., product_125_v3), developers ensured a fresh fetch whenever critical content evolved.

- Implemented Redis Pub/Sub or keyspace notifications: Keyspace notifications, which Redis delivers over Pub/Sub, can alert the app when a key has expired. This allows host caches to react or invalidate their own corresponding keys. Note that these notifications are disabled by default and must be enabled through the notify-keyspace-events setting, and that an expired event fires when Redis actually removes the key, which can be slightly later than the moment the TTL reaches zero.
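The three measures above can be sketched together as follows. Everything here is illustrative: `versioned_key`, `capped_host_ttl`, `HostCache`, and `handle_expired_event` are hypothetical names, the host cache is a plain dictionary wrapper, and the expiry event is passed in by hand rather than received from a real Redis subscription.

```python
from typing import Optional


def versioned_key(base: str, version: int) -> str:
    """Build a cache-busting key such as product_125_v3."""
    return f"{base}_v{version}"


def capped_host_ttl(host_default: float, shared_remaining: Optional[float]) -> float:
    """Never let the host copy outlive the shared entry it was read from.

    shared_remaining is the number of seconds the shared entry has left,
    or None when the entry is missing or has no usable expiry.
    """
    if shared_remaining is None or shared_remaining <= 0:
        return 0.0  # do not cache on the host at all
    return min(host_default, shared_remaining)


class HostCache:
    """Dictionary-backed stand-in for a host-level object cache."""

    def __init__(self):
        self._items = {}

    def set(self, key, value):
        self._items[key] = value

    def get(self, key):
        return self._items.get(key)

    def invalidate(self, key):
        self._items.pop(key, None)


def handle_expired_event(host_cache: HostCache, expired_key: str) -> None:
    """React to an 'expired' notification by dropping the matching host copy."""
    host_cache.invalidate(expired_key)


# Bumping the version changes the key, so the next read misses the old entry.
print(versioned_key("product_125", 3))   # product_125_v3
print(versioned_key("product_125", 4))   # product_125_v4

# A 10-minute host default is cut down to the 4 seconds the shared entry has left.
print(capped_host_ttl(600, 4))           # 4

# Simulated notification: the host copy disappears with the shared key.
host = HostCache()
host.set("stock:125", 3)
handle_expired_event(host, "stock:125")
print(host.get("stock:125"))             # None
```

Capping the host TTL needs no extra infrastructure, while notification-based invalidation reacts faster but depends on every host actually receiving the event, so the two are often worth combining.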

Other Advanced Strategies for Resolution

Beyond TTL tuning, developers adopted advanced patterns that respected Redis’ data freshness:

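- Write-through and Write-around caching: Write-through updates the cache in the same operation as the backing store, while write-around sends writes straight to the store and lets the cache repopulate on the next read. Either way, the cache changes in step with data write events instead of drifting until a TTL expires, letting Redis act as the source of truth for the host layer.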

- Centralized cache management: Introducing a middleware or cache orchestration layer that handles what gets cached, where, and for how long.

- Distributed TTL policies: Synchronize expiry times across Redis and host cache using configuration management tools like Consul or etcd.
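As a minimal sketch of two of these patterns, the block below shows a write-through path that keeps all layers in step and a central TTL policy that can be checked for misalignment. The names `WriteThroughCatalog`, `TTL_POLICY`, and `policy_violations` are hypothetical, the three layers are plain dictionaries, and the TTL values are made up for illustration; in a real deployment the policy would come from a configuration service rather than a literal.

```python
class WriteThroughCatalog:
    """Toy three-layer store: database, shared cache, host cache."""

    def __init__(self):
        self.database = {}
        self.shared_cache = {}
        self.host_cache = {}

    def write(self, key, value):
        # Write-through: update the database and the shared cache together,
        # then drop the local host copy so it cannot serve the old value.
        self.database[key] = value
        self.shared_cache[key] = value
        self.host_cache.pop(key, None)

    def read(self, key):
        if key in self.host_cache:
            return self.host_cache[key]
        if key in self.shared_cache:
            value = self.shared_cache[key]
        else:
            value = self.database.get(key)
            if value is None:
                return None
            self.shared_cache[key] = value
        self.host_cache[key] = value
        return value


# Hypothetical central policy: TTLs in seconds per data type and layer.
TTL_POLICY = {
    "stock": {"shared": 5, "host": 5},
    "product_detail": {"shared": 600, "host": 60},
    "session": {"shared": 30, "host": 300},  # deliberately misaligned
}


def policy_violations(policy):
    """Return data types whose host TTL exceeds the shared-cache TTL."""
    return [name for name, ttl in policy.items() if ttl["host"] > ttl["shared"]]


catalog = WriteThroughCatalog()
catalog.write("stock:125", 3)
print(catalog.read("stock:125"))  # 3, and now cached on the host
catalog.write("stock:125", 0)
print(catalog.read("stock:125"))  # 0, because the write dropped the host copy

print(policy_violations(TTL_POLICY))  # ['session']
```

The write path here only clears the host cache of the process that performed the write. With several hosts, the other copies still need a short TTL or a notification to catch up.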

By combining these mechanisms, developers reclaimed control over how data propagated and expired across tiers.

Lessons Learned and Takeaway

The key lesson from this experience is the danger of isolated cache policy design. When building multi-layer caching architectures, especially with a volatile data store like Redis involved, each layer’s cache expiration must consider the others.

Here’s a summary of key best practices:

- Always determine which system—Redis or host cache—is closer to the source of truth for specific data types.

- Align TTL durations based on data volatility and usage patterns.

- Implement versioning or notification-based invalidation where TTLs aren’t practical.

- Test caching behavior thoroughly in staging environments that mirror production data volatility.

Conclusion: Smart TTL = Happy Users

On the surface, caching layers like Redis and host object caches promise nothing but speed. Yet, without strategic synchronization, these layers can miscommunicate and fracture data integrity. Redis TTL is a powerful feature, but its effectiveness depends on the broader ecosystem in which it operates. Only by treating TTLs as multi-layered expiry protocols—not isolated timelines—can developers create fluid, performant, and accurate data delivery systems.

Think of caching not as storage—but as a strategy. When your cache policies communicate, your application earns the speed it deserves, without compromising on truth.