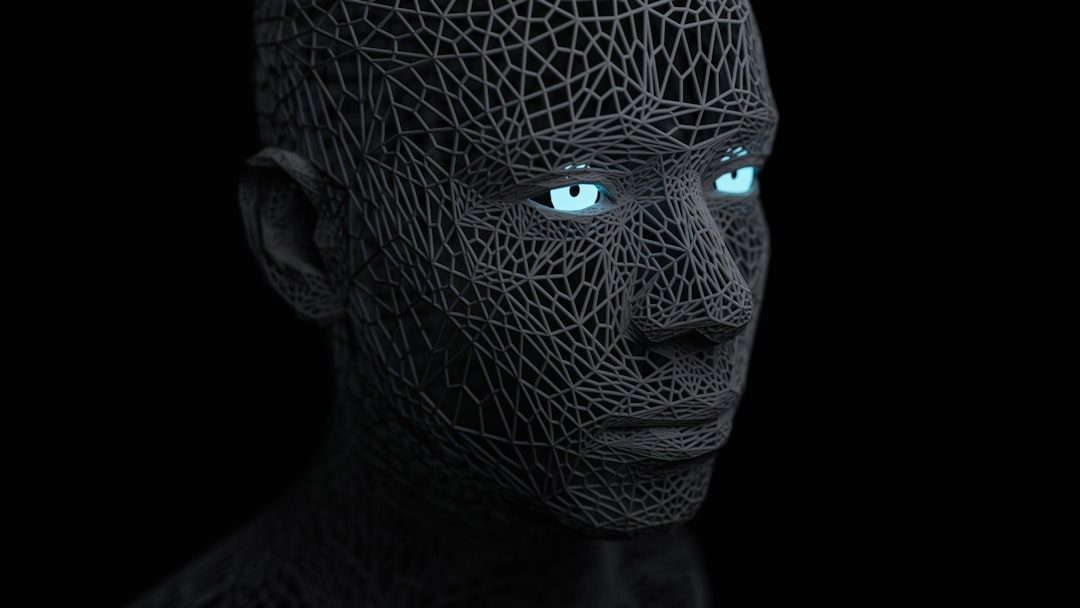

As the sophistication and frequency of cyberattacks continue to escalate, the need for advanced security mechanisms capable of adapting in real time has never been more pressing. In this evolving landscape, multimodal AI detection is emerging as a critical pillar of trust and resilience within cybersecurity ecosystems. By leveraging insights across multiple data types—text, audio, images, video, and behavior—multimodal AI offers a more comprehensive, intelligent, and adaptive approach to detecting and mitigating threats.

TL;DR:

Multimodal AI detection is becoming an essential component in modern cybersecurity strategies due to its ability to analyze diverse data sources such as audio, text, video, and behavioral patterns. This approach significantly strengthens threat detection by reducing false positives and keeping pace with evolving attack vectors. With traditional single-modality detection struggling to counter highly integrated, cross-platform threats, multimodal AI serves as a robust trust layer that reinforces resilience, intelligence, and adaptability in organizational defense systems.

Why Traditional Detection Methods Are No Longer Enough

Cyberattacks have grown far more complex and multidimensional, often integrating several channels for maximum impact. Phishing campaigns may now include fake voice messages, forged documents, and manipulated videos, all used together to deceive victims. Legacy systems, which rely heavily on signature-based or single-modality detection, are often incapable of responding to this level of sophistication.

Relying on traditional tools is like reading a book one word at a time and hoping to follow the plot. Each tool sees only its own slice of activity, so none has the holistic view needed to piece together seemingly unrelated indicators of compromise. As attackers become more agile and deceptive, defenders must match or exceed that agility. This is where multimodal AI steps in.

What Is Multimodal AI and Why It Matters

Multimodal AI systems integrate and analyze information from various data types to build a richer, more contextual understanding of the environment. These modalities can include:

- Text: Email content, chat logs, application logs

- Audio: Voice messages, command inputs, recordings

- Visuals: Screenshots, videos, security footage

- Behavior: User navigation patterns, time-of-day access patterns, login geolocation

By fusing inputs from these varied sources, multimodal AI detection engines can connect dots that would otherwise remain isolated. For example, two logins from distant locations within minutes of each other already look suspicious on their own. A multimodal system can strengthen that signal if the user's voiceprint in a recorded customer support interaction also differs subtly from earlier recordings.
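The login-location and voiceprint example above can be sketched as a simple late-fusion score. This is a minimal illustration, not a production design: the `Login` type, the 900 km/h speed ceiling, the toy voice embeddings, and the 0.6/0.4 weights are all assumptions made for the example, and a real system would obtain embeddings from a trained speaker model.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt
from typing import Mapping, Sequence


@dataclass
class Login:
    lat: float        # degrees
    lon: float        # degrees
    timestamp: float  # seconds since epoch


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations, in kilometres."""
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(min(h, 1.0)))


def travel_risk(prev: Login, curr: Login, max_kmh: float = 900.0) -> float:
    """Implied travel speed scaled to 0..1; 1.0 means at or above max_kmh."""
    hours = max(abs(curr.timestamp - prev.timestamp) / 3600.0, 1e-6)
    return min(haversine_km(prev, curr) / hours / max_kmh, 1.0)


def voice_risk(enrolled: Sequence[float], observed: Sequence[float]) -> float:
    """1 minus cosine similarity of two voice embeddings, clamped to 0..1."""
    dot = sum(x * y for x, y in zip(enrolled, observed))
    norm = sqrt(sum(x * x for x in enrolled)) * sqrt(sum(y * y for y in observed))
    similarity = dot / norm if norm else 0.0
    return min(max(1.0 - similarity, 0.0), 1.0)


def fuse(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted average of per-modality risk scores (late fusion)."""
    total = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total


# Hypothetical data: a New York login followed by a London login ten minutes later.
new_york = Login(40.71, -74.01, timestamp=0.0)
london = Login(51.51, -0.13, timestamp=600.0)
scores = {
    "travel": travel_risk(new_york, london),
    "voice": voice_risk([1.0, 0.0, 0.0], [0.9, 0.1, 0.0]),
}
risk = fuse(scores, weights={"travel": 0.6, "voice": 0.4})
```

A weighted average is only one way to combine modalities. It is easy to explain and audit, but it assumes the weights are tuned against labeled incidents rather than picked by hand as they are here.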

How Multimodal AI Establishes a Core Layer of Trust

Trust in cybersecurity means being able to confirm with high confidence that entities—be they systems, users, or requests—are legitimate. Multimodal AI strengthens this by:

- Enhancing Detection Accuracy: Corroborating a signal across multiple data sources lowers the chance of both false positives and false negatives.

- Dynamic Threat Response: Unlike static rule sets, AI can evolve its understanding and reaction based on new behaviors it learns across modalities.

- User Authentication Strengthening: Combining facial recognition, typing cadence, and voice can provide multimodal biometric verification that is harder to spoof than any single biometric.

- Anomaly Detection in Real Time: Risks across cloud services, endpoints, and networks can be flagged immediately by correlating their data streams.

This approach not only enables detection of explicit threats but also uncovers subtle weaknesses that previously went undetected. It becomes the connective tissue across disparate security systems, offering a single source of truth reinforced by multiple dimensions of evidence.

Real-World Applications in Cybersecurity

Organizations across various sectors—financial services, healthcare, government, and education—are increasingly integrating multimodal AI into their cybersecurity infrastructure. Below are some key use cases:

1. Advanced Phishing Detection

Phishing emails today may come with deepfake attachments or voice prompts to call a number. A multimodal AI system can analyze the email content, assess the legitimacy of embedded audio, and cross-reference behavioral patterns of the recipient to recommend caution or block the threat automatically.
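One way to picture the "recommend caution or block" decision is a noisy-OR combination, where any single strong signal is enough to raise the overall score. The sketch below is illustrative only: the three input scores are assumed to come from upstream models that are not shown, and the `warn_at` and `block_at` thresholds are hypothetical values.

```python
from math import prod
from typing import Iterable


def noisy_or(scores: Iterable[float]) -> float:
    """Probability that at least one signal is a true hit, assuming independence."""
    return 1.0 - prod(1.0 - s for s in scores)


def triage(
    email_text_score: float,
    audio_deepfake_score: float,
    recipient_behavior_score: float,
    warn_at: float = 0.5,
    block_at: float = 0.9,
) -> str:
    """Map three per-modality scores in 0..1 to 'deliver', 'warn', or 'block'."""
    combined = noisy_or(
        [email_text_score, audio_deepfake_score, recipient_behavior_score]
    )
    if combined >= block_at:
        return "block"
    if combined >= warn_at:
        return "warn"
    return "deliver"
```

Three weak signals of 0.4, 0.3, and 0.2 combine to roughly 0.66 and produce a warning, even though none of them would cross the warning threshold alone. The independence assumption is a simplification, since signals from the same email are often correlated.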

2. Insider Threat Monitoring

By evaluating access patterns, keystroke dynamics, and even emotional tone in internal communications, organizations can identify signs of data exfiltration or sabotage faster than would be possible through logs alone.

3. Fraud Prevention in Financial Transactions

A bank's AI system can check visual identity documents (such as ID images), match them against live audio or facial input, and compare transaction patterns against a user’s historical behavior to flag anomalies.
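As a rough sketch of that comparison, the snippet below flags a transaction when its amount is a statistical outlier against the user's history or when the face-to-ID match score is low. The z-score test, both thresholds, and the `id_face_match` score (assumed to come from a separate face-matching model) are illustrative assumptions rather than how any particular bank does it.

```python
from statistics import mean, stdev
from typing import List, Sequence


def amount_zscore(history: Sequence[float], amount: float) -> float:
    """How many standard deviations the amount sits from the user's mean."""
    if len(history) < 2:
        return 0.0  # not enough history to judge
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if amount == history[0] else float("inf")
    return abs(amount - mean(history)) / sigma


def flag_transaction(
    history: Sequence[float],
    amount: float,
    id_face_match: float,
    z_threshold: float = 3.0,
    match_threshold: float = 0.8,
) -> List[str]:
    """Return the reasons a transaction looks anomalous (empty list if none)."""
    reasons = []
    if amount_zscore(history, amount) > z_threshold:
        reasons.append("amount deviates from history")
    if id_face_match < match_threshold:
        reasons.append("face does not match ID image")
    return reasons


# Hypothetical purchase history for one user.
history = [20, 25, 22, 30, 18]
print(flag_transaction(history, 500, id_face_match=0.95))
```

Returning the list of reasons, rather than a bare yes or no, keeps the decision explainable to the analyst or customer who has to act on it.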

The Synergy with Zero Trust Architecture

The shift toward Zero Trust architecture—where no user or device is inherently trusted, even inside the network—is perfectly aligned with the capabilities of multimodal AI. In a Zero Trust model:

- Continuous verification ensures constant monitoring of identity and device behavior.

- Least privilege access restricts resources based on verified needs.

- Segmentation and automatic isolation can be triggered when AI detects unusual behavior across any modality.

Multimodal AI acts as the sensory system in this architecture, continuously gathering and interpreting data to guide fine-grained access controls. This elevates decision-making from rule-based logic to intelligent, context-aware processing.
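The three Zero Trust principles above can be expressed as a small policy function that is evaluated on every request. This is a simplified sketch: the `Decision` names, the thresholds, and the idea of a single fused `risk` value in 0..1 are assumptions for illustration, not part of any Zero Trust standard.

```python
from enum import Enum
from typing import AbstractSet


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"   # ask for additional verification
    DENY = "deny"
    ISOLATE = "isolate"   # cut the session off from the network segment


def decide(
    granted: AbstractSet[str],
    resource: str,
    risk: float,
    step_up_at: float = 0.4,
    isolate_at: float = 0.8,
) -> Decision:
    """Evaluate one request; called on every access, not just at login."""
    if risk >= isolate_at:
        return Decision.ISOLATE       # automatic isolation on high risk
    if resource not in granted:
        return Decision.DENY          # least privilege: no grant, no access
    if risk >= step_up_at:
        return Decision.STEP_UP       # continuous verification
    return Decision.ALLOW
```

For example, `decide({"payroll"}, "payroll", risk=0.5)` returns `Decision.STEP_UP`: the user holds the grant, but the elevated risk score triggers re-verification before access continues.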

Challenges and Ethical Considerations

Despite its promise, implementing multimodal AI in cybersecurity is not without challenges. Key concerns include:

- Data Privacy: Collecting behavioral and biometric data raises ethical concerns around consent and surveillance.

- Bias in AI Models: Misinterpretation of cultural, linguistic, or demographic indicators can lead to biased actions by the system.

- Complexity in Integration: Seamlessly fusing multiple data streams into coherent outcomes requires advanced infrastructure and talent.

To mitigate these, firms must ensure transparency in AI governance and adopt standards that prioritize civil liberties alongside security. Regulatory bodies are beginning to issue guidance on responsible AI use, which should be closely followed.
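One concrete transparency check is to compare false positive rates across user groups, since a detector that flags benign users from one group far more often than another is a sign of bias. The sketch below assumes a hypothetical audit log of `(group, flagged, malicious)` records; the group labels and data are synthetic.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_positive_rates(
    records: Iterable[Tuple[str, bool, bool]]
) -> Dict[str, float]:
    """Per-group share of benign events that the system flagged anyway.

    Each record is (group, flagged_by_system, actually_malicious).
    """
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, was_flagged, was_malicious in records:
        if not was_malicious:
            benign[group] += 1
            if was_flagged:
                flagged[group] += 1
    return {group: flagged[group] / benign[group] for group in benign}


# Synthetic audit records for two groups.
audit = [
    ("a", True, False),
    ("a", False, False),
    ("b", False, False),
    ("b", False, False),
    ("b", True, True),   # a true positive, ignored by the FPR calculation
]
rates = false_positive_rates(audit)
```

Here group "a" has a false positive rate of 0.5 and group "b" a rate of 0.0, a gap that would warrant a closer look at the model and its training data.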

The Future Outlook

As threats become increasingly polymorphic and identity-based, multimodal AI is set to anchor the next wave of cybersecurity innovation. The spread of technologies like 5G and edge computing only broadens the attack surface, and the transition to quantum-resistant encryption adds migration complexity of its own. In such an environment, relying solely on linear, single-layered defenses is not just inadequate; it may become dangerously obsolete.

Forward-thinking organizations are treating multimodal AI not merely as a technical enhancement but as a strategic differentiator—a new digital immune system capable of detecting, analyzing, and responding to threats in fractions of a second.

Conclusion

In a rapidly evolving cyber threat landscape, building trust must be adaptive, intelligent, and context-aware. Multimodal AI detection systems answer this call by combining insights from various modalities to offer stronger, real-time protection. As organizations continue to digitize and interconnect their operations, embedding such AI-driven systems will become essential—not optional.

Savvy enterprises are already leading this shift, laying a robust foundation of trust that goes beyond passwords and firewalls. It’s no longer about simply defending the perimeter—it’s about understanding every signal, in every context, and responding at machine speed.